The concept of Virtual Memory (VM) is similar to that of Cache Memory. While a cache addresses the speed of memory access by the CPU, Virtual Memory addresses the capacity of Main Memory (MM) by mapping it onto secondary memory, i.e. the hard disk. Both Cache and Virtual Memory are based on the principle of locality of reference. Virtual Memory gives processes and programmers the illusion that unlimited memory is available.

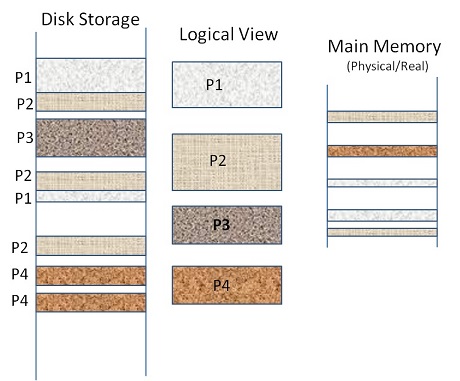

In a VM implementation, a process sees resources through a logical view, while the CPU sees them through a physical (real) view. Every program or process begins at starting address '0' (logical view). However, there is only one real '0' address in Main Memory, and at any instant many processes reside in Main Memory (physical view). Memory management hardware provides the mapping between the logical and physical views.

VM is implemented in hardware and assisted by the OS's memory management task. The basic facts of VM are:

- All memory references made by a process are logical and are dynamically translated by hardware into physical addresses.

- The whole program code or data need not be present in physical memory, and neither the data nor the program needs to occupy contiguous locations of physical Main Memory. Similarly, every process may be broken up into pieces that are loaded as needed.

- The storage in secondary memory need not be contiguous either. (Recall that a single file may be stored in different sectors of the disk, which you may observe while defragmenting.)

- However, the logical view is contiguous. The other views are transparent to the user.

Virtual Memory Design factors

Any VM design has to address the following factors, choosing among the available options.

- Type of implementation – Segmentation, Paging, Segmentation with Paging

- Address Translation – Logical to Physical

- Address Translation Type – Static or Dynamic Translation

  - Static Translation – A few simple programs are loaded once and may be executed many times. During the lifetime of these programs, nothing much changes and hence the address space can be fixed.

  - Dynamic Translation – Complex user programs and system programs use stacks, queues, pointers, etc., which require space that grows at run time. Space is allotted as the requirement comes up. In such cases, Dynamic Address Translation is used. In this chapter, we discuss only Dynamic Address Translation methods.

- A Page/Segment table must be maintained to record what is available in MM

- Identification of the Information in MM as a Hit or Page / Segment Fault

- Page/Segment Fault handling Mechanism

- Protection of pages/ Segments in Memory and violation identification

- Allocation / Replacement Strategy for Page/Segment in MM – same as in Cache Memory. FIFO, LIFO, LRU and Random are a few examples.

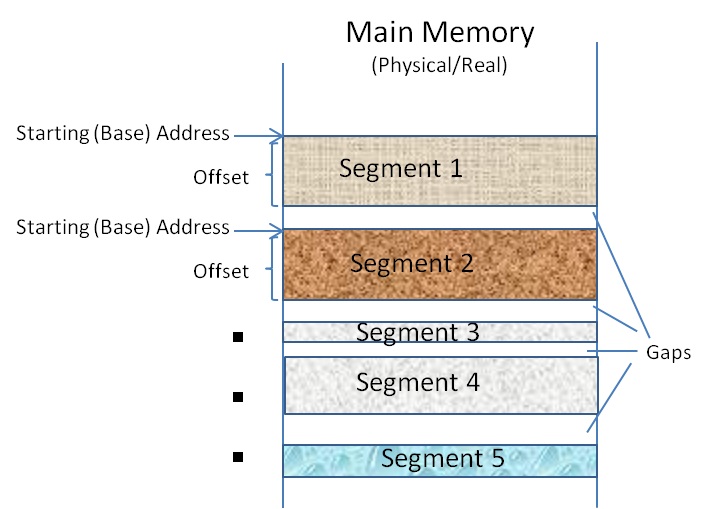

Segmentation

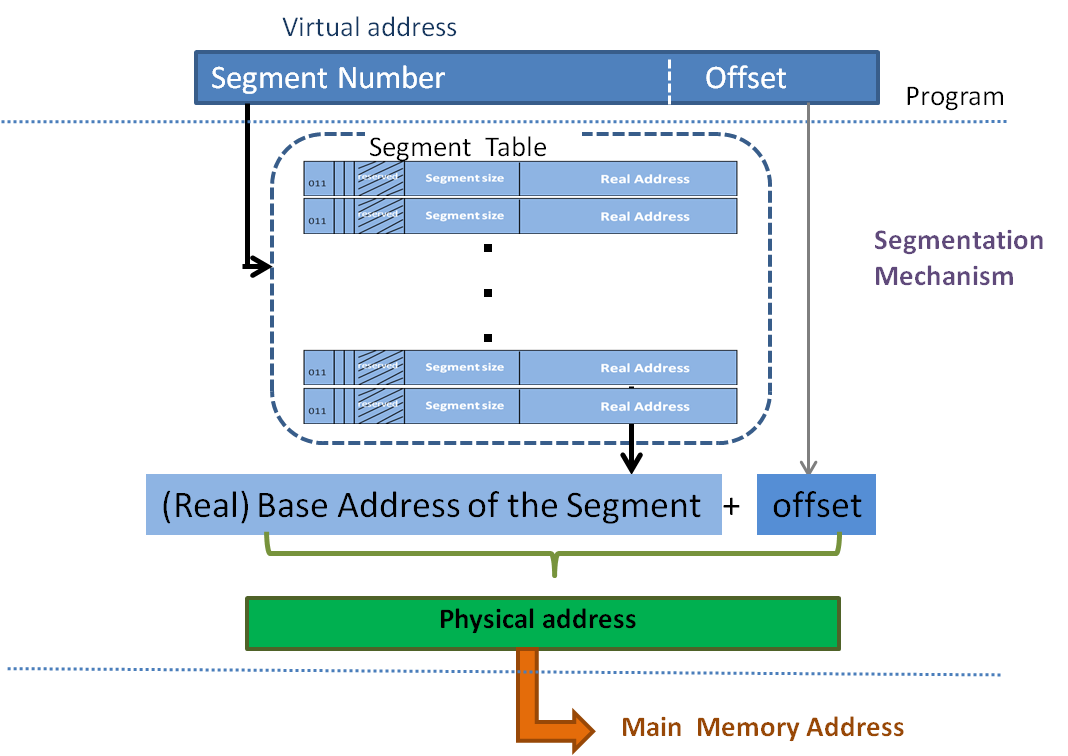

A Segment is a logically related, contiguous allocation of words in MM. Segments vary in length. A segment corresponds to a logical entity such as a program, a stack or a data area. A word in a segment is addressed by specifying the base address of the segment and the offset within the segment, as in figure 19.2.

A segment table must be maintained with the details of the segments in MM and their status. Figure 19.3 shows typical entries in a segment table. The segment table resides in the OS area of MM. The part of a segment that is shared with other programs/processes is created as a separate segment, and the access rights for that segment are set accordingly. The Presence bit indicates that the segment is available in MM. The Change bit indicates that the content of the segment has been changed after it was loaded into MM and is no longer a copy of the disk version. Recall that in a multilevel hierarchical memory, the lower level has to be kept coherent with the level immediately above it. The address translation in a segmentation implementation is shown in figure 19.4. The virtual address generated by the program has to be converted into a physical address in MM. The segment table helps achieve this translation.
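The base-plus-offset translation can be sketched in Python as follows. This is only an illustration: the field names, the `length` field, the exception names and the sample table values are assumptions made for the example, not the layout of any particular machine's segment table.

```python
from dataclasses import dataclass


@dataclass
class SegmentEntry:
    base: int       # physical start address of the segment in MM
    length: int     # segment length in words (assumed field)
    present: bool   # Presence bit: segment is loaded in MM
    changed: bool   # Change bit: contents differ from the disk copy
    rights: str     # access rights, e.g. "RX" or "RW"


class SegmentFault(Exception):
    """Segment is not in main memory; the OS must load it."""


class SegmentViolation(Exception):
    """Offset beyond the segment length, or access not permitted."""


def translate(table, segment, offset, access="R"):
    """Return the physical address for (segment, offset)."""
    entry = table.get(segment)
    if entry is None or not entry.present:
        raise SegmentFault(segment)
    if offset < 0 or offset >= entry.length:
        raise SegmentViolation(f"offset {offset} outside segment {segment}")
    if access not in entry.rights:
        raise SegmentViolation(f"{access} access denied on segment {segment}")
    if access == "W":
        entry.changed = True   # MM copy no longer matches the disk copy
    return entry.base + offset


# Hypothetical segment table for one process.
segment_table = {
    "code": SegmentEntry(base=4000, length=1200, present=True, changed=False, rights="RX"),
    "data": SegmentEntry(base=9000, length=300, present=True, changed=False, rights="RW"),
    "stack": SegmentEntry(base=0, length=500, present=False, changed=False, rights="RW"),
}

print(translate(segment_table, "code", 100))       # 4100
print(translate(segment_table, "data", 20, "W"))   # 9020
```

Accessing the "stack" segment in this sketch raises `SegmentFault`, because its Presence bit is clear.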

Generally, a Segment size coincides with the natural size of the program/data. Although this is an advantage on many occasions, there are two problems to be addressed in this regard.

- Identifying a contiguous area in MM for the required segment size is a complex process.

- Chunks of memory are identified and allotted as per requirement. This can leave small gaps of free memory, each too small to be allotted to a new segment. At the same time, the sum of such gaps may become large enough to be undesirable. These gaps are called external fragmentation. External fragments are cleared by a special OS process such as Compaction.
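A small numerical sketch of external fragmentation, with made-up gap sizes and a simple first-fit search chosen purely for illustration: the total free space exceeds the request, yet no single gap can hold it until compaction merges the gaps.

```python
def first_fit(free_gaps, size):
    """Return the index of the first gap that can hold `size`, or None."""
    for index, gap in enumerate(free_gaps):
        if gap >= size:
            return index
    return None


free_gaps = [300, 120, 450, 200]   # hypothetical free gap sizes, in words
request = 600                      # size of the new segment, in words

print(sum(free_gaps))                  # 1070 words are free in total
print(first_fit(free_gaps, request))   # None: no single gap is big enough

# Compaction slides the allocated segments together so that the free
# space forms one contiguous gap.
compacted = [sum(free_gaps)]
print(first_fit(compacted, request))   # 0
```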

Paging

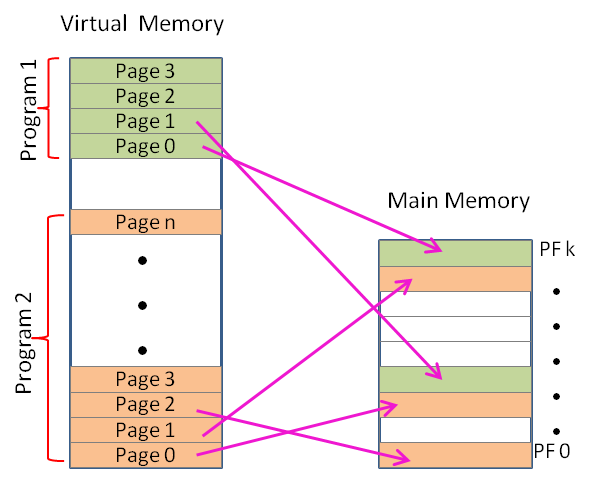

Paging is another implementation of Virtual Memory. The logical storage is divided into Pages of some fixed size, say 4KB. The MM is viewed and numbered as page frames, each the same size as a Page. Pages from the logical view are fitted into the empty Page Frames in MM. This is analogous to placing a book on a bookshelf. The concept is also similar to cache blocks and their placement. Figure 19.5 shows how the pages of two programs are fitted into Page Frames in MM. As you can see, any page can be placed into any available Page Frame. Unallotted Page Frames are shown in white.

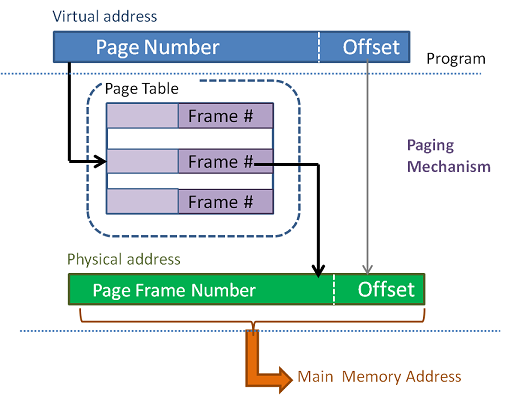

This mapping has to be maintained in a Page Table and is used during address translation. Typically, a page table entry contains the virtual page address, the corresponding physical frame number where the page is stored, the Presence bit, the Change bit and the access rights (refer figure 19.6). The Page Table is consulted to check whether the desired page is available in MM. The Page Table itself resides in a part of MM. Thus every memory access requested by the CPU refers to memory twice: once to read the page table and a second time to get the data from the accessed location. This is called the Address Translation Process and is detailed in figure 19.7.

| Virtual Page Address | Page Frame Number | Presence bit P | Change bit C | Access Rights |
|---|---|---|---|---|
| A | 0004000 | 1 | 0 | R, X |
| B | 0645728 | 0 | 1 | R, W, X |
| D | 0010234 | 1 | 1 | R, W, X |
| F | 0060216 | 0 | 0 | R |
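The page-number/offset split behind this translation can be sketched in Python. The page table contents below are made up, the 4 KB page size is the example size mentioned earlier, and the names `translate` and `PageFault` are illustrative assumptions rather than any real MMU interface.

```python
PAGE_SIZE = 4096          # 4 KB pages (example size)
OFFSET_BITS = 12          # 2**12 == 4096


class PageFault(Exception):
    """Page is not in main memory; the OS must bring it in from disk."""


# virtual page number -> (frame number, Presence bit); values are made up
page_table = {
    0: (5, True),
    1: (2, True),
    2: (0, False),   # this page currently lives only on disk
}


def translate(virtual_address):
    """Translate a virtual address into a physical address."""
    page = virtual_address >> OFFSET_BITS          # high bits: page number
    offset = virtual_address & (PAGE_SIZE - 1)     # low 12 bits: offset
    frame, present = page_table.get(page, (None, False))
    if not present:
        raise PageFault(page)
    return (frame << OFFSET_BITS) | offset         # frame number + same offset


print(hex(translate(0x1ABC)))   # page 1 maps to frame 2: 0x2abc
```

Only the page number is replaced by a frame number; the offset within the page passes through unchanged.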

Page size determination is an important factor in obtaining maximum page hits and minimum thrashing. Thrashing is very costly in VM because it means repeatedly fetching data from disk, which is likely to be 1000 times or more slower than MM.
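To get a feel for the cost, here is a rough back-of-the-envelope sketch. The timings are assumptions chosen only to reflect a 1000-times ratio (100 ns for an MM access, 100,000 ns to service a fault from disk), and the model is simplified: it ignores the retried access after the fault.

```python
def effective_access_ns(faults_per_million, mm_ns=100, disk_ns=100_000):
    """Average time per access in ns for a given page fault rate.

    mm_ns and disk_ns are assumed figures, not measurements.
    """
    hits = 1_000_000 - faults_per_million
    total_ns = hits * mm_ns + faults_per_million * disk_ns
    return total_ns / 1_000_000


for faults in (0, 10_000, 100_000):
    print(faults, effective_access_ns(faults))
```

Under these assumptions, a fault on just 1% of accesses (10,000 per million) raises the average access time from 100 ns to about 1099 ns, roughly an elevenfold slowdown.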

In the paging mechanism, Page Frames of fixed size are allotted. Some pages may have less content than the page size, as happens in printed books. This leaves unutilized space (a fragment) in a page frame, and this space cannot be used for any other purpose. Since these fragments are inside the allotted Page Frame, this is called Internal Fragmentation.
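The amount of internal fragmentation follows from simple arithmetic. The sketch below assumes the 4 KB example page size and a hypothetical 10,000-byte program.

```python
PAGE_SIZE = 4096  # bytes; example page size


def pages_needed(size_bytes):
    """Number of page frames needed (ceiling division)."""
    return -(-size_bytes // PAGE_SIZE)


def internal_fragmentation(size_bytes):
    """Unused bytes left in the last allotted page frame."""
    return pages_needed(size_bytes) * PAGE_SIZE - size_bytes


size = 10_000   # hypothetical program size in bytes
print(pages_needed(size))             # 3
print(internal_fragmentation(size))   # 2288
```

The wasted space is always less than one page per allocation, which is one reason why a larger page size tends to increase internal fragmentation.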

Additional Activities in Address Translation

During address translation, a few more activities take place, as listed below; they are not shown in figures 19.4 and 19.7 for simplicity.

- In segmentation, the offset is compared with the length of the segment recorded in the segment table. If the offset exceeds the length, it is a Segment Violation and an error is generated to this effect.

- The control bits are meant to be used during Address Translation.

- The presence bit is verified to know that the requested segment/page is available in the MM.

- The Change bit indicates that the segment/page in main memory is not a true copy of that in Disk; if this segment/page is a candidate for replacement, it is to be written onto the disk before replacement. This logic is part of the Address Translation mechanism.

- Segment/Page access rights are checked to detect any access violation. For example, a segment/page with the Read-only attribute cannot be accessed for WRITE.

- If the requested Segment/Page is not in the respective table, it is not available in MM and a Segment/Page Fault is generated. What happens subsequently is:

  - The OS takes over to READ the segment/page from disk.

  - A segment needs to be allotted from the available free space in MM. With paging, an empty Page Frame needs to be identified.

  - If no free space/Page Frame is available, the page replacement algorithm identifies the candidate Segment/Page Frame to be replaced.

  - The data from disk is written into MM.

  - The Segment/Page Table is updated to record that a new block is available in MM.
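The fault-handling steps above can be sketched as a small simulation. Everything here is illustrative: main memory is assumed to hold only three page frames, FIFO is picked as the replacement policy simply because it is the shortest to write, and disk traffic is only counted, not performed.

```python
from collections import OrderedDict

NUM_FRAMES = 3   # hypothetical: main memory holds only three page frames


class Memory:
    def __init__(self):
        # page -> Change bit; insertion order doubles as FIFO order
        self.resident = OrderedDict()
        self.faults = 0
        self.write_backs = 0

    def access(self, page, write=False):
        if page not in self.resident:          # presence check fails
            self.faults += 1
            self._handle_fault(page)
        if write:
            self.resident[page] = True         # set the Change bit

    def _handle_fault(self, page):
        if len(self.resident) >= NUM_FRAMES:   # no free frame: pick a victim
            victim, changed = self.resident.popitem(last=False)  # FIFO
            if changed:
                self.write_backs += 1          # victim is written to disk first
        self.resident[page] = False            # fresh copy read from disk


mm = Memory()
trace = [(1, True), (2, False), (3, False), (4, False), (1, False), (2, True)]
for page, write in trace:
    mm.access(page, write)

print(mm.faults, mm.write_backs, list(mm.resident))   # 6 1 [4, 1, 2]
```

Only page 1 had its Change bit set when it was evicted, so it is the only victim that costs a write to disk; clean pages are simply overwritten.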

Translation Look-aside Buffer (TLB)

Every Virtual address Translation requires two memory references,

- once to read the segment/page table and

- once more to read the requested memory word.

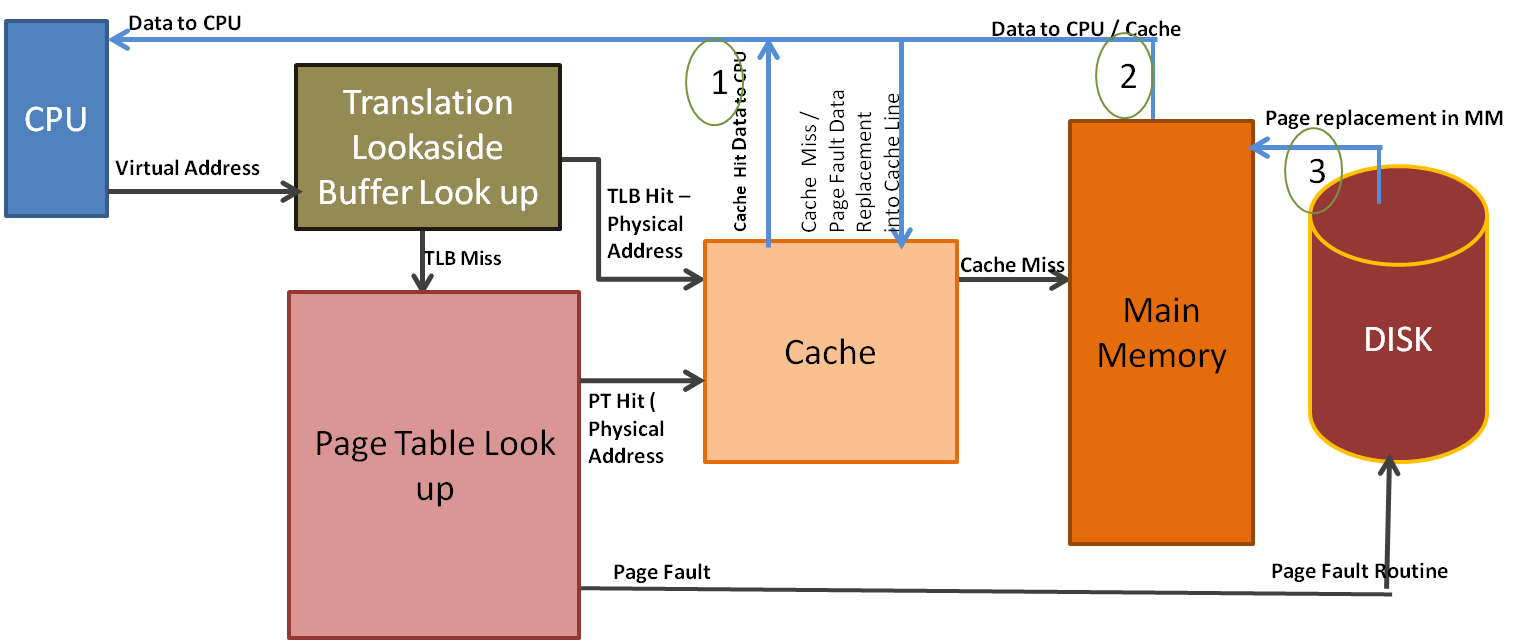

The TLB is a hardware mechanism designed to speed up page table lookup by avoiding the extra access to MM. A TLB is a fully associative cache of the Page Table. Its entries correspond to the most recently used translations. The TLB is sometimes referred to as an address cache. The TLB is part of the Memory Management Unit (MMU), and the MMU is present in the CPU block.

TLB entries are similar to those of the Page Table. With a TLB, every virtual address is first checked in the TLB for address translation. On a TLB miss, the page table in MM is consulted. Thus, a TLB miss does not by itself cause a page fault; a page fault is generated only if the page table lookup also misses. Since the TLB is an associative address cache in the CPU, a TLB hit provides the fastest possible address translation; the next best is a page hit in the Page Table; the worst is a page fault.
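The lookup order can be sketched as follows. The two-entry TLB, the LRU eviction policy and the page table contents are all assumptions made to keep the example small; real TLBs are implemented in hardware and vary in size and policy.

```python
from collections import OrderedDict

TLB_SIZE = 2   # hypothetical, tiny on purpose

page_table = {0: 7, 1: 3, 2: 9}   # resident pages: page -> frame (made up)
tlb = OrderedDict()               # page -> frame, least recently used first


def lookup(page):
    """Return (frame, outcome); outcome is 'TLB hit', 'page table hit'
    or 'page fault'."""
    if page in tlb:
        tlb.move_to_end(page)              # mark as most recently used
        return tlb[page], "TLB hit"
    if page in page_table:                 # costs one extra MM access
        if len(tlb) >= TLB_SIZE:
            tlb.popitem(last=False)        # evict least recently used entry
        tlb[page] = page_table[page]       # cache the translation
        return tlb[page], "page table hit"
    return None, "page fault"              # OS must load the page from disk


outcomes = [lookup(p)[1] for p in (0, 0, 1, 2, 0, 5)]
print(outcomes)
```

In this trace, the second access to page 0 is a TLB hit, the later access to page 0 is only a page table hit because its entry was evicted in between, and page 5 produces a page fault since it is not in the page table at all.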

Having discussed the individual address translation options, it should be understood that in a multilevel hierarchical memory all these functional structures coexist, i.e. TLB, Page Tables, Segment Tables, Cache (multiple levels), Main Memory and Disk. There can be many Page Tables, organized in many levels, in which case a few Page Tables may reside on disk. In this scenario, what is the order in which the tables are checked for address translation and data is served to the CPU? Refer figure 19.8.

The address translation lookup sequence starts from the level closest to the CPU, i.e.

TLB -> Segment / Page Table Level 1 -> Segment / Page Table Level n

Once the address is translated into a physical address, then the data is serviced to CPU. Three possibilities exist depending on where the data is.

Case 1 - TLB or PT hit and also Cache Hit - Data returned from Cache to CPU

Case 2 - TLB or PT hit and Cache Miss - Data returned from MM to CPU and Cache

Case 3 - Page Fault - Data from disk loaded into a segment / page frame in MM; MM returns data to CPU and Cache

In short, on a page hit either the cache or MM provides the data to the CPU readily, and the protocol between cache and MM remains intact. On a Segment/Page fault, the OS routine loads the required data into Main Memory. In this case the data is not in the cache either. Therefore, while the data is returned to the CPU, the cache is also updated, treating it as a cache miss.
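The three cases reduce to a small decision function. This is only a restatement of the cases above in Python; the function name and the returned strings are illustrative.

```python
def service(tlb_or_pt_hit, cache_hit):
    """Return the data path for one memory access."""
    if not tlb_or_pt_hit:
        # Case 3: page fault; the data cannot be in the cache either,
        # so the cache_hit argument is ignored here.
        return "disk -> MM -> CPU and cache"
    if cache_hit:
        return "cache -> CPU"                  # Case 1
    return "MM -> CPU and cache"               # Case 2


print(service(True, True))     # cache -> CPU
print(service(True, False))    # MM -> CPU and cache
print(service(False, False))   # disk -> MM -> CPU and cache
```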

Advantages of Virtual Memory

- Generality - ability to run programs that are larger than the size of physical memory.

- Storage management - allocation/deallocation either by Segmentation or Paging mechanisms.

- Protection - regions of the address space in MM can selectively be marked as Read Only, Execute, etc.

- Flexibility - portions of a program can be placed anywhere in Main Memory without relocation

- Storage efficiency - retain only the most important portions of the program in memory

- Concurrent I/O - execute other processes while a page is being loaded/dumped. This increases overall performance

- Expandability - Programs/processes can grow in virtual address space.

- Seamless and better Performance for users.