Pipelining increases system performance through simple design changes in the hardware. It enables parallel execution at the hardware level. Pipelining does not reduce the execution time of individual instructions, but it reduces the overall execution time required for a program. In a pipelined design, multiple instructions are in execution during the same timing state, in an overlapped manner.

We have seen that an instruction cycle consists of a few machine cycles, such as Instruction Fetch, Decode, Execute, and Result Writing. A functional unit is designed to handle each machine cycle operation. These functional units work independently and simultaneously, each on a different instruction in the pipe. For example, during the instruction fetch machine cycle of one instruction, the decode and execution units are free to decode and execute other instructions. This design achieves overlapped execution and is called pipelined design.

Figure 15.1 shows the timing requirements for pipelined vs. non-pipelined execution of 3 instructions as a sample case.

Suppose each instruction takes time t for one instruction cycle.

In the non-pipelined (no overlap) case, executing the 3 instructions takes 3t.

With pipelining, the instruction cycles of these instructions overlap, and the 3 instructions may take approximately 1.6t.

Pipelining thus improves performance by reducing the total execution time. The details of the implementation are discussed later in the chapter.

Pipelining is achieved by having multiple independent functional units in hardware. These independent functional units are called stages. Instructions enter stage 1, pass through all n stages, and exit at the nth stage. This movement of instructions through the stages resembles entering a pipe at one end and leaving it at the other; hence the name pipelining.

Why is pipelining desirable?

- Pipelining improves the speed of a machine.

- Pipelining facilitates improvements in processing time that would otherwise be unachievable with existing non-pipelined technology.

- Pipelining improves performance by increasing instruction throughput, without decreasing the execution time of each instruction.

Instruction Pipelining Characteristics

Overlapping the machine cycles of multiple instructions so that they execute simultaneously in a single processor is known as instruction pipelining.

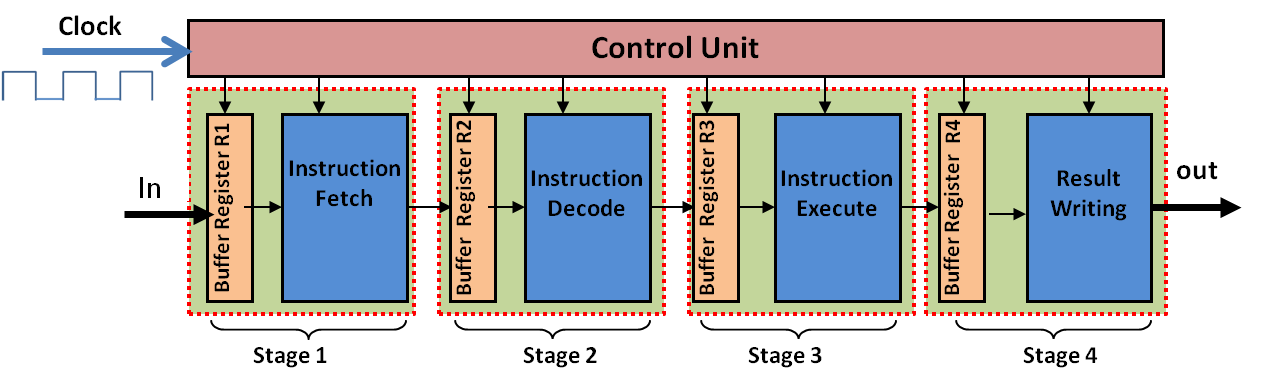

- The hardware of the CPU is split up into several functional units called pipeline stages, each one performing a dedicated and functionally independent task.

- The number of functional units (stages) may vary from processor to processor.

- The number of stages in the pipeline equals the number of operations that can be executed independently and simultaneously in the CPU (refer to figure 15.3). Generally, this also means that the CPU can have as many instructions in execution simultaneously as there are stages.

- Each stage corresponds to one machine cycle.

- The length of the machine cycle is determined by the time required by the slowest pipe stage; that is, the machine cycle must be long enough for every stage to finish its function or computation.

- The control unit manages all the stages using control signals.

- There is a buffer register associated with each stage that holds the data.

- The CPU clock synchronizes the working of all the stages.

- At the beginning of each clock cycle, each stage takes the input from its buffer-in register.

- Each stage then processes the data and stores the temporary result in its buffer-out register, which also serves as the buffer-in register for the next stage (refer to figure 15.2).
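The buffer-register behaviour described in the list above can be sketched as a small simulation. This is a minimal model under stated assumptions, not a hardware description: every stage takes exactly one clock cycle, there are no hazards, and the names `STAGES` and `run_pipeline` are hypothetical. The key point it shows is that all stages read their buffer-in registers at the same clock edge, so the new register contents are computed from the old ones in one step.

```python
# Hypothetical model of a four-stage pipeline (IF, ID, IE, RW) as in figure 15.3.
# Assumption: every stage takes exactly one clock cycle and there are no hazards.
STAGES = ["IF", "ID", "IE", "RW"]


def run_pipeline(instructions, num_stages=len(STAGES)):
    """Clock instructions through the pipeline; return {instruction: completion cycle}."""
    # regs[s] models the buffer-out register of stage s, which doubles as the
    # buffer-in register of stage s + 1. None means "no instruction here".
    regs = [None] * num_stages
    waiting = list(instructions)
    completed = {}
    clock = 0
    while waiting or any(r is not None for r in regs[:-1]):
        clock += 1
        # One clock edge: every stage reads its buffer-in register at the same
        # time, so the whole register list shifts by one position at once.
        # The first stage fetches a new instruction if one is waiting.
        fetched = waiting.pop(0) if waiting else None
        regs = [fetched] + regs[:-1]
        # Whatever the last stage (RW) processed in this cycle has completed.
        if regs[-1] is not None:
            completed[regs[-1]] = clock
    return completed


print(run_pipeline(["I1", "I2", "I3", "I4", "I5"]))
# {'I1': 4, 'I2': 5, 'I3': 6, 'I4': 7, 'I5': 8}
```

Building the new list in one expression, rather than updating registers one at a time, mirrors the synchronous clock: no stage sees a value written by another stage in the same cycle.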

Take a minute to study the phase diagram so that the following discussion is easier to follow.

As figure 15.3 shows, each clock cycle is referred to as a timing state and is also a machine cycle. The figure shows 5 instructions passing through a four-stage pipeline. The four stages are Instruction Fetch (IF) from memory, Instruction Decode (ID) in the CPU, Instruction Execute (IE) in the ALU, and Result Writing (RW) to memory or a register. Every instruction must pass through all four stages to complete its execution.

Instruction 1 (I1) completes at t4. At t1, I1 is in the IF stage; because IF is the first stage of any execution, no other instruction can overlap with it yet. When I1 moves on to stage 2 (ID) at t2, the second instruction (I2) enters the pipeline at the IF stage, so at t2 two stages are busy, each with one instruction. Similarly, at t3, I1 and I2 move on to the IE and ID stages respectively, I3 enters IF, and so on. From t4 onwards, all stages are utilised for as long as instructions keep flowing in.

After t4, completed instructions leave the pipeline at the rate of one per timing state. The phase diagram shows that from timing state t5 onwards, one instruction completes execution and leaves the pipeline in each timing state, making way for new instructions, if any, to enter.
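The walkthrough above follows a simple rule: instruction i enters IF at timing state t_i and then advances one stage per timing state, so at timing state t it occupies stage t - i. The sketch below generates the phase diagram from that rule, assuming an ideal pipeline with no stalls; `phase_diagram` is a hypothetical helper name.

```python
# Assumed ideal four-stage pipeline from figure 15.3 (no hazards or stalls).
STAGES = ["IF", "ID", "IE", "RW"]


def phase_diagram(num_instructions, stages=STAGES):
    """Return one row per timing state t1, t2, ...; each row lists the
    instruction occupying each stage, or "--" if the stage is idle."""
    k = len(stages)
    total_states = k + num_instructions - 1
    rows = []
    for t in range(1, total_states + 1):
        row = []
        for s in range(k):
            # Instruction i entered IF at timing state i, so at timing state t
            # the instruction in stage index s is i = t - s.
            i = t - s
            row.append(f"I{i}" if 1 <= i <= num_instructions else "--")
        rows.append(row)
    return rows


rows = phase_diagram(5)
print("     " + " ".join(f"{s:>3}" for s in STAGES))
for t, row in enumerate(rows, start=1):
    print(f"t{t:<3} " + " ".join(f"{cell:>3}" for cell in row))
```

The printed table has 8 rows for 5 instructions, with the RW column filled from t4 to t8, matching the one-completion-per-timing-state pattern described above.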

Although in theory all machine cycles are identically timed, in practice the stages are not perfectly balanced. Two examples follow.

- Instruction decode is simple static decoding and requires little time, roughly the gate delay of the circuit involved.

- The time required for memory operations is always dictated by the memory, for two reasons: memory is generally shared between the CPU and the I/O subsystems, and memory is a slower device than the CPU. It is the memory that throttles the CPU. For these reasons, memory cycles such as instruction fetch and result writing to memory are likely to take longer.
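To see how this imbalance sets the clock, the sketch below applies the rule that the machine cycle must accommodate the slowest stage. The stage delays are hypothetical values chosen only to mirror the two examples (a fast decode and slow memory-bound fetch and write), and the model ignores buffer-register overhead.

```python
# Hypothetical stage delays in nanoseconds (illustrative only, not from the text):
# decode is fast, while the memory-bound IF and RW stages are slow.
stage_delay_ns = {"IF": 10, "ID": 2, "IE": 6, "RW": 9}


def cycle_time(delays):
    """The machine cycle must be long enough for the slowest stage to finish."""
    return max(delays.values())


def idle_time(delays):
    """Time each stage spends idle in every cycle, waiting for the clock."""
    t = cycle_time(delays)
    return {stage: t - d for stage, d in delays.items()}


print(cycle_time(stage_delay_ns))  # 10
print(idle_time(stage_delay_ns))   # {'IF': 0, 'ID': 8, 'IE': 4, 'RW': 1}
```

With these assumed numbers, the decode stage sits idle for most of every cycle, which is the cost of unbalanced stages.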

Note the performance gain. As the phase diagram shows, the four-stage pipelined design executes 5 instructions in 8 clock cycles. The same instructions would take 20 clock cycles (5 instructions × 4 cycles each) in a non-pipelined architecture. The performance improvement depends on the number of stages in the design.
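The cycle counts above follow from a simple count, assuming equal stage times and no stalls: the first instruction needs k cycles to pass through a k-stage pipeline, and each of the remaining n - 1 instructions completes one cycle later. The function names are hypothetical.

```python
def pipelined_cycles(n, k):
    """Clock cycles for n instructions on an ideal k-stage pipeline:
    k cycles for the first instruction, then one more per remaining instruction."""
    if n == 0:
        return 0
    return k + (n - 1)


def non_pipelined_cycles(n, k):
    """Each instruction uses all k machine cycles, with no overlap."""
    return n * k


print(pipelined_cycles(5, 4))      # 8
print(non_pipelined_cycles(5, 4))  # 20
```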

Performance Measures of Instruction Pipelining

Three measures are generally discussed:

- Latency

- Throughput

- Speed Up

The latency of a pipeline is defined as the time required for an instruction to propagate through the pipeline. It is measured in units of time, such as microseconds or nanoseconds.

Latency is higher if the pipeline encounters many exceptions or hazards.
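A rough way to quantify latency, under a simplified model that is an assumption here, is to count the cycles one instruction spends in the pipeline and multiply by the cycle time. Each stage takes one full cycle, and each stall caused by a hazard adds one extra cycle for that instruction. The function name and the 10 ns cycle are hypothetical.

```python
def latency_ns(num_stages, cycle_ns, stall_cycles=0):
    """Time for one instruction to traverse the pipeline.

    Simplified model (an assumption): each stage takes one full clock cycle,
    and every hazard-induced stall adds one extra cycle for this instruction.
    """
    return (num_stages + stall_cycles) * cycle_ns


print(latency_ns(4, 10))                  # 40 ns with no hazards
print(latency_ns(4, 10, stall_cycles=2))  # 60 ns if hazards cause two stalls
```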

The throughput of a pipeline is the rate at which instructions can start and finish, i.e., the number of instructions completed per second.

For a fixed number of stages, throughput is inversely proportional to latency: the greater the latency, the lower the throughput.
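The relationship between throughput and latency can be checked with an idealized model (equal stage times, no stalls; the function names and 10 ns cycle are hypothetical). For a finite run of n instructions, the pipeline-fill cycles lower the throughput. Once the pipeline is full, one instruction completes per cycle, and because cycle time = latency / number of stages, steady-state throughput equals the number of stages divided by the latency.

```python
def throughput_per_sec(n, num_stages, cycle_ns):
    """Instructions completed per second for n instructions on an ideal pipeline."""
    total_ns = (num_stages + n - 1) * cycle_ns
    return n / total_ns * 1e9


def steady_state_throughput_per_sec(num_stages, latency_ns):
    """Full pipeline: one completion per cycle, and cycle = latency / num_stages."""
    return num_stages / latency_ns * 1e9


print(throughput_per_sec(5, 4, 10))           # about 6.25e7: fill cycles dominate
print(throughput_per_sec(10_000, 4, 10))      # close to 1e8
print(steady_state_throughput_per_sec(4, 40)) # about 1e8 for a 40 ns latency
```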

Speedup - We claim that a pipelined design is faster than a non-pipelined design. To decide how many stages to incorporate, the designer needs a quantitative measure. Speedup is such a measure of the performance gained, generally computed relative to the non-pipelined case.
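For the idealized case used in the phase diagram (equal stage times, no stalls, and a non-pipelined design that spends k cycles per instruction), speedup is the ratio of the two cycle counts, nk / (k + n - 1). The sketch below computes it; the function name is hypothetical. It reproduces the 20-versus-8-cycle example and shows that speedup approaches the number of stages k as n grows, which is why the stage count matters to the designer.

```python
def speedup(n, k):
    """Speedup of an ideal k-stage pipeline over a non-pipelined design in which
    each instruction takes k cycles (equal stage times, no stalls assumed)."""
    return (n * k) / (k + n - 1)


print(speedup(5, 4))  # 2.5, i.e. 20 cycles vs 8 cycles
for n in (10, 100, 10_000):
    # Speedup rises toward k = 4 as the number of instructions grows.
    print(n, round(speedup(n, 4), 3))
```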

Average execution time per instruction - It is roughly calculated as below. Another purpose of a pipelined architecture is to allow shorter clock cycles and hence a reduced average execution time per instruction.
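One common idealized approximation is sketched below. It is an assumption here, not necessarily the chapter's own formula. The assumed non-pipelined design runs one instruction at a time, taking the sum of all stage delays. The pipelined design clocks at the slowest stage's delay and needs k + n - 1 cycles for n instructions. The stage delays are hypothetical.

```python
# Hypothetical stage delays in ns for IF, ID, IE, RW (illustrative only).
stage_delay_ns = [10, 2, 6, 9]


def avg_time_non_pipelined(delays):
    """Assumed non-pipelined model: each instruction takes the sum of all stage delays."""
    return sum(delays)


def avg_time_pipelined(delays, n):
    """Ideal pipeline: clock period = slowest stage, k + n - 1 cycles for n instructions."""
    k = len(delays)
    return (k + n - 1) * max(delays) / n


print(avg_time_non_pipelined(stage_delay_ns))    # 27
print(avg_time_pipelined(stage_delay_ns, 5))     # 16.0
print(avg_time_pipelined(stage_delay_ns, 1000))  # about 10.03, tending to the 10 ns cycle
```

As n grows, the average time per instruction tends toward the shorter pipelined clock period rather than the full sum of the stage delays.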

Cycles per Instruction (CPI) is commonly needed, because CPI serves as an abstract measure in many calculations that compare CPUs. Generally, a pipelined architecture is designed to complete one instruction per clock cycle, i.e., to achieve a CPI of one.
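The sketch below shows how close a pipeline gets to a CPI of one. It divides the total cycle count by the number of instructions. Hazards are accounted for in an assumed, simplified way, as a total count of stall cycles, and the function name is hypothetical.

```python
def pipelined_cpi(n, k, stall_cycles=0):
    """Effective cycles per instruction for n instructions on a k-stage pipeline.
    stall_cycles is the total number of bubble cycles (an assumed, simplified
    way of accounting for hazards)."""
    return (k + n - 1 + stall_cycles) / n


print(pipelined_cpi(5, 4))                       # 1.6
print(pipelined_cpi(1000, 4))                    # 1.003, close to the ideal CPI of 1
print(pipelined_cpi(1000, 4, stall_cycles=200))  # 1.203
```

The fill cycles become negligible over long instruction streams, so in this model stalls are what keep a real pipeline's CPI above one.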

Applications of Pipelined Design Concept

The concept of pipelining is applicable to:

- Instruction-level parallelism - several instructions executed in an overlapped manner, in sequence

- Arithmetic computation parallelism - the same computation carried out on several datasets in parallel using multiple units

- Memory access - using a kind of burst mode, several consecutive locations are accessed faster