In a general trend, the technological advancements in CPU speedup are very much higher than that of Memory. To reduce this gap, it is imminent to find a solution that will increase the data throughput between memory and CPU. Otherwise, we will not be able to meet the demand for processing capacity and storage requirements of applications.

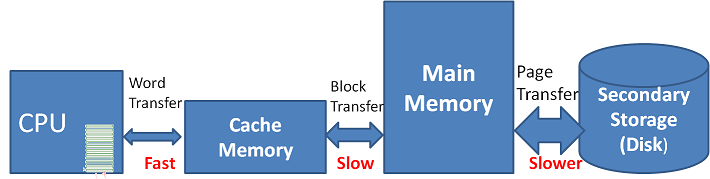

We have already seen in the previous chapter that a hierarchical memory design is one of the useful approaches. A Cache Memory approach increases the system throughput and Virtual Memory incorporation extends the effective size of memory. A logical and functional way of looking at the positions of Cache and Virtual Memory is shown in figure 18.1. But do remember, the Cache is physically part of the CPU although shown apart in the diagram. Both Cache and Virtual Memory together provide a cost-effective solution on their part.

Principle of Cache and Virtual Memory

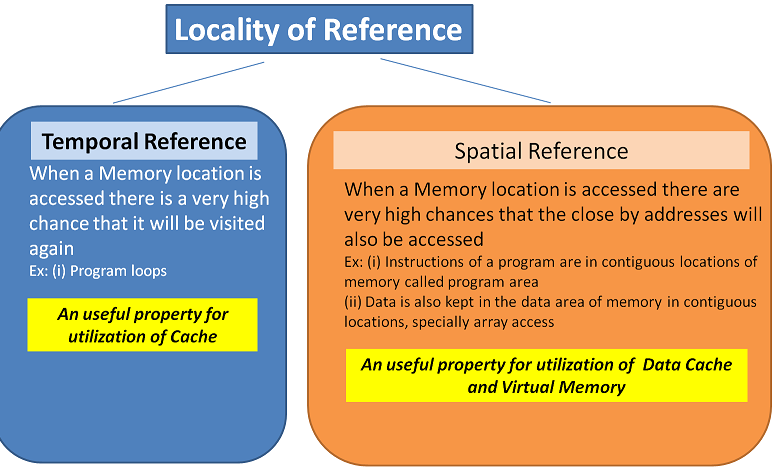

- Principle of Locality of Reference - Once a location is accessed, then there is a very high chance that either the same location (Temporal) or adjacent locations (spatial) are likely to be referenced sooner. Refer to figure 18.2.

The Locality of Reference depicts the way programs are written and machine code is generated. After all, a program runs on a dataset of some large size (Datafile). In such a case, if a design is carried out to provide fast access to CPU for such locations, then the effective throughput of the system is realised. Cache Memory serves this purpose by holding a replica of memory in a small capacity. Secondary storage acts as virtual memory for main memory with its large capacity.

Figure 18.2 Facts about Locality of Reference - In a hierarchical memory, a very important property is that the lower level holds a subset of information from the upper level as a replica. i.e. Cache is a fast but small capacity memory which holds a subset of information from main memory. Main memory holds a subset of information from Virtual memory secondary storage.

Cache Memory

Cache Memory is a small capacity but fast access memory which is functionally in between CPU and Memory and holds the subset of information from main memory, which is most likely to be required by the CPU immediately. The analogy helps understand the role of Cache.

Analogy

We have super markets in our locality and petty shops in our street.

You go to petty shop when you quickly want to get few things. You go to super markets and buy many items in large quantities.

Now, the petty shop owner understands the locality's requirements and stocks those items. The super market holds almost every thing that you ever need.

The petty shop fellow needs to replenish his stock based on demand and fast moving item, else the shop is useless. Obviously he will replenish from the main stores like super market.

He has to have a strategy to identify the items, time the replacement and method for procurement.

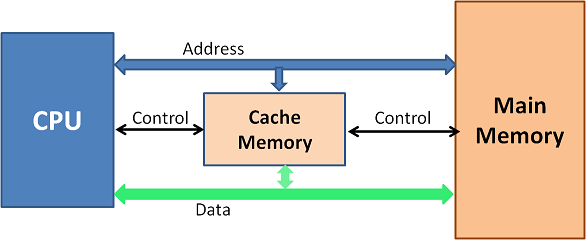

In simple terms, every memory access is first examined for the presence in the Cache. Figure 18.3 envisions the access path between CPU, Cache and Main Memory.

When the CPU initiates READ on a memory location,

- It is checked and determined whether it is available in the Cache

- If available, at the access rate of Cache, the data is returned to CPU

- If not available, access is made to main memory. The particular word is supplied to CPU and also written in the Cacheline of the Cache. (This is required keeping in line with the Principle of Locality of Reference)

When a write is initialised by CPU, the procedure is complicated as the update has to happen at both Cache and Main memory to maintain coherence. A policy to handle this scenario is required and we discuss this later in the chapter.

Cache Terms to be familiarized

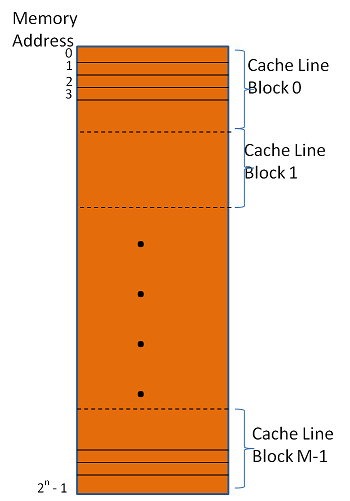

- A Cache is organized in units of Blocks called Cache Lines. Common block sizes could be 16, 32, and 64 bytes or so. A Cacheline (Block) is the smallest unit of data movement between a cache and the Main memory. Always the movement is one block at a time.

- Memory is also viewed as an organization of blocks from the Cache perspective to facilitate block transfer in terms of cache line between memory and Cache and to maintain data coherence. If the block size is 16, then the first 15 space of memory are a part of block 0, bytes 16-31 are in block 1, etc. Refer to figure 18.4.

- Big blocks uses spatial locality better.

- A Hit occurs when a memory reference by CPU is found in the Cache. Not found in Cache is a Miss.

- The hit rate is the fraction of memory references that are Hits. A cache is designed to achieve at least a 90 % hit rate. The miss rate is (1 - hit rate), which is the fraction of references that are misses.

- The Hit time is the time required to service CPU in the case of a hit.

- The Miss time is the time required to service CPU in the case of a miss.

- The Miss penalty is (Miss time - Hit time). Penalty to be reduced with a higher hit rate.

Parameters of Cache Design

- Cache Size (Capacity) – Criteria is to have an average cost per bit close to that of Main Memory and average access time close to that of the fast Cache Memory.

- Cache Line (Block) Size

The Cache Size and Cache line size are decided by the designer based on acceptable performance. The width of the cache has few components.

Width of Cache = Tag size + control bits + Block size

Tag size depends on the cache line size and memory size.

- Mapping Function – Mapping is the basis by which the cache blocks are referenced to main memory blocks. There are three basic mapping methods:

- Direct Mapping

- Associative mapping

- Set associative mapping

- Hit Logic – Extra hardware is required to locate the word in the cache as a Hit or Miss and hence subsequent actions may follow.

- Placement Strategy - Where to fill the blocks from main memory into the cache lines of cache memory requires a strategy and is decided by the mapping function. Placement strategy comes into picture when not all cache blocks are full with valid data.

- Block Replacement Algorithms – There is a Miss and a data block is to be placed into the cache. But all cache blocks have valid data. Therefore a decision to be taken about the candidate block for replacement. Most popular Replacement algorithms are:

- Random

- Least Recently Used (LRU)

- First In First Out (FIFO)

- Least Frequently Used (LFU)

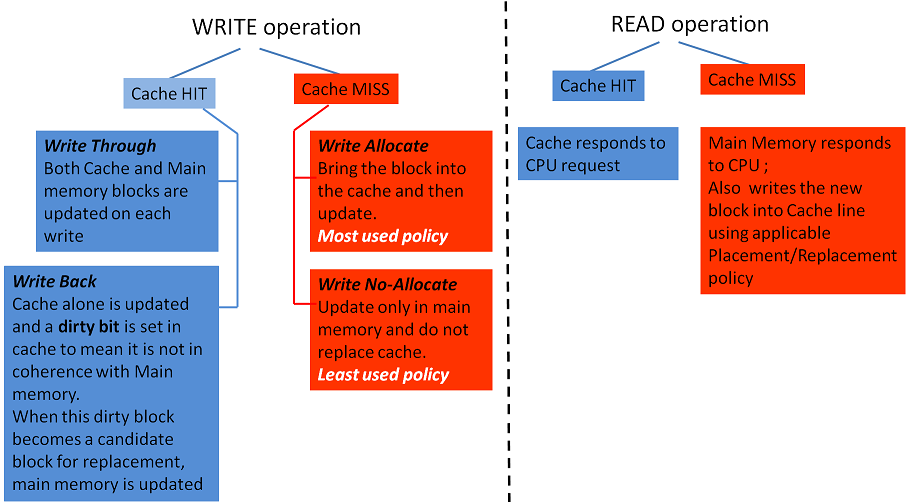

- Write Policy - In the case of WRITE HIT access on to the Cache, it is not enough to update the Cache alone, the main memory copy is also required to be updated to maintain replica. So a policy is required to manage this. The choices are as below.

Mapping Functions

It is important to learn and understand how the blocks are referred by cache and maintained in case of MISS and HIT. We will take a sample scenario as below and get the logic explained for Direct mapped Cache, Associative Cache and Set associative Cache.

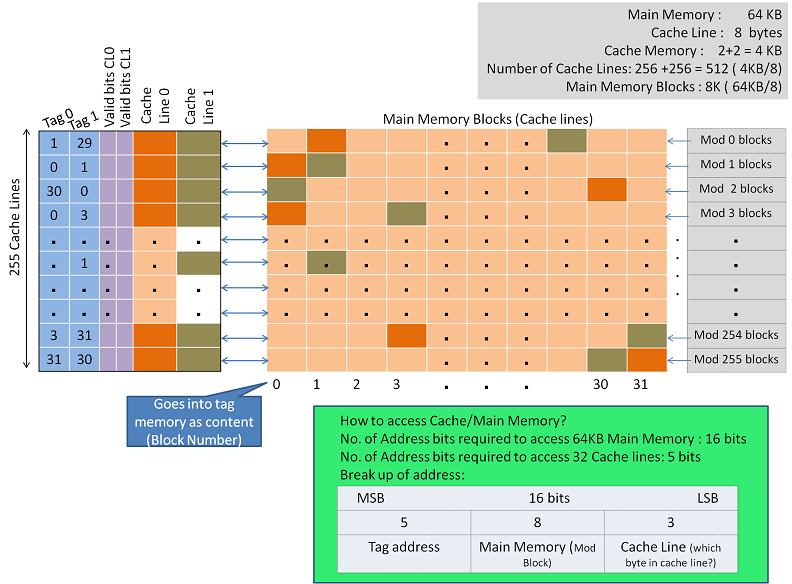

Main memory : 64KB Cache Line : 8 bytes Cache Memory : 2KB Number of cache lines : 256 (2KB/8) Main memory blocks : 8K (64KB/8)

Each cache line is associated with a VALID BIT, to indicate that the cache is a replica of MM (Main memory). The tag memory holds information about MM block that is present in the cache line. With this information infrastructure, the Address Translation Mechanism, the Write policy, Block Placement and Replacement Policies, the cache memory is used seamlessly by the CPU.

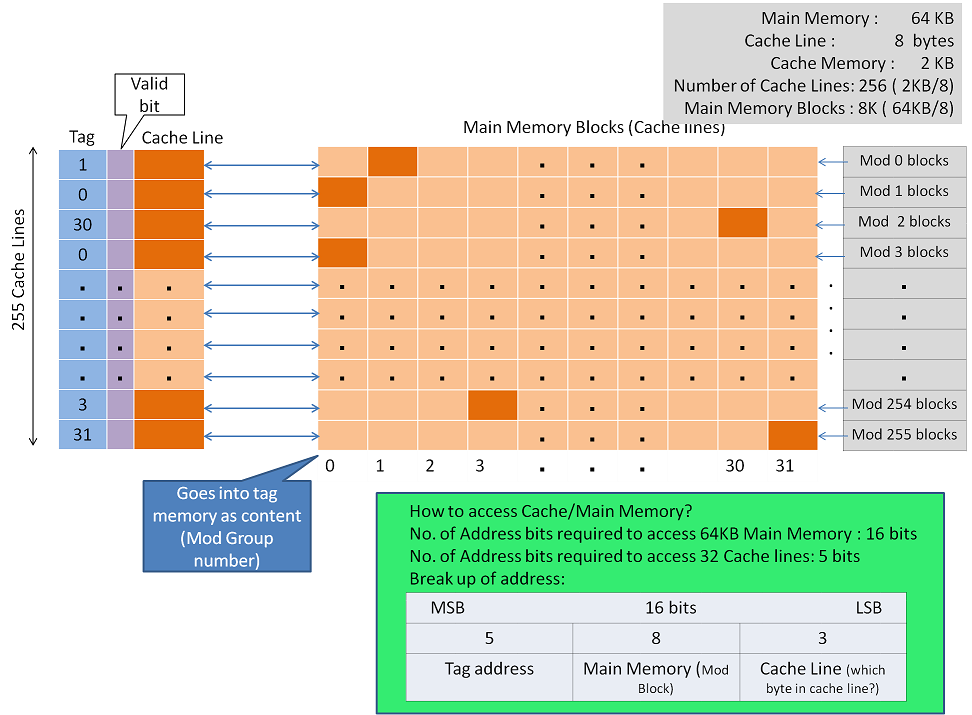

Direct Mapped Cache

It is a direct mapping between a set of memory blocks and a cache line. The set of memory blocks that correspond to a cache line are decided by a mod factor. The mod value is derived by dividing the number of cache lines in Main Memory by those in the cache memory. The modulo operation implies a repetitive structure. Refer to figure 18.5.

- Each cache line can have a different main memory block from the eligible Mod group

- The Tag memory stores the Mod group number that the cache line holds. Refer to figure 18.5 to understand how this number is derived and picked.

- Cache Hit Detection:

- From the MM address generated by the CPU (CPU does not generate a separate address form cache access), using the mod function, identify the tag memory location to be accessed ( Refer to the green box in the figure for address translation)

- If the corresponding Valid bit is TRUE, then Compare the tag part of the MM Address with the tag content

- If a match, then HIT, else MISS.

- If it is a HIT, Cache services the CPU by selecting the desired word from the cache line ( recall cache line is larger than one CPU word), else it is a Cache Miss

- Cache MISS Scenario is common to any mapping function. The process proceeds with block replacement strategy. In this case of Direct mapping, the cache line gets replaced with one the desired block from the mod group.

- Advantages of Direct mapped Cache is the simple hardware requirement due to the simple block replacement strategy.

- The disadvantage is frequent "Thrashing" is a possibility. If two blocks from the same mod group are accessed frequently then the cache line also needs to be swapped in as much, causing performance degradation.

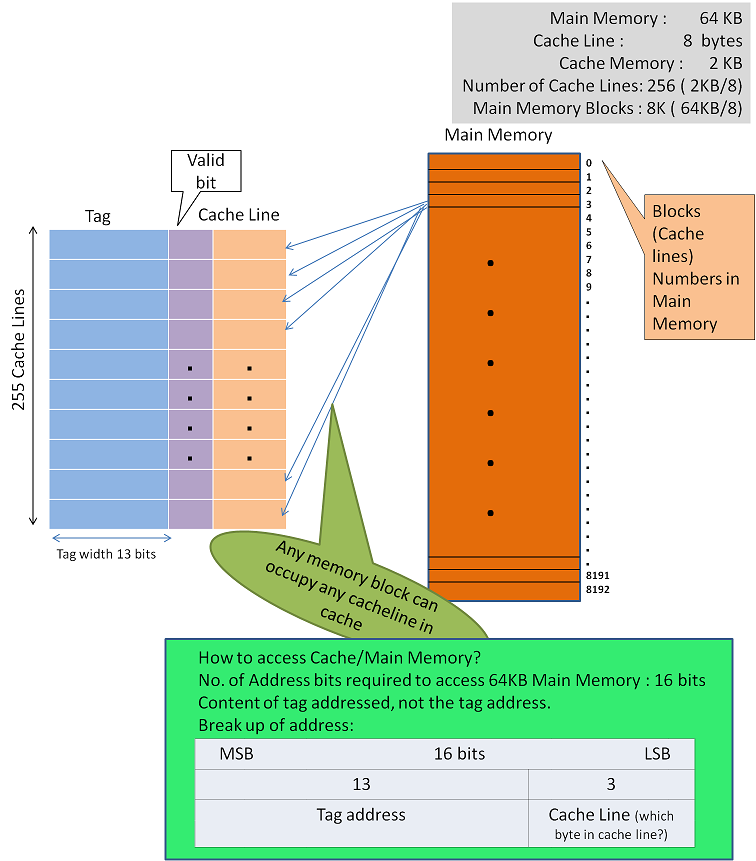

Associative Mapping of Cache

In Associative mapping, any block from main memory can be put in any cache line. The presence of a block is searched by reading the content of all the cache lines. This search happens on the tag memory simultaneously with necessary extra hardware. This mechanism is a kind of Content Addressable memory.

- The tag memory holds the address of the block that the cache line is holding. Therefore the width of the tag memory is equal to the bits that are required to store the maximum block number. ( in this case 8192; hence the width of the tag memory is 13)

- Cache hit Identification:

- By content-addressable mechanism read the contents of the tags simultaneously

- Compare with the block address part of the Memory address generated by CPU

- If anyone of the tag memory location matches, then verify the VALID bit to be TRUE.

- If so, Cache HIT is generated and CPU is serviced by the Cache

- If not it is a MISS

- If the access is a Cache MISS, using the predesigned Replacement Policy, Cache line is filled from main memory and also CPU is serviced.

- Advantage – Most flexible as any block can be mapped to any cache line.

- Disadvantage – Large size (width) of tag memory and extra hardware for content searching.

- Set Associative mapping provides a balanced solution for the above disadvantage.

Set Associative mapping of Cache

It is an extension of the Direct mapped Cache technique by increasing the cache resources. The idea is to allow several cache blocks in each mod group to be present in the cache. If we have n sets of cache, then n blocks from each Mod group are made available thereby increasing the Cache HIT ratio.

The mapping of blocks is direct-mapped while searching the address within the set in Tag memory is associative. Hence the name Set-associative Mapping. This has the speed advantage of Direct mapped cache. Searching the n tag memory content is not as complex as in the case of associative memory.

Designers go up to 16-way set associative to get the best performance. We have limited our example to 2-way to simplify our understanding.

Unlike Direct mapped cache, set-associative mapping requires a defined replacement algorithm strategy.

3Cs of Misses

In a Cache Memory, a MISS occurs for three reasons as below.

- Compulsory Miss – occurs on “Power ON” when the cache is not yet placed with any data.

- Capacity Miss – Bound to happen because more often all the cache blocks are placed with valid data and hence block replacement is necessitated on a miss. This could be avoided by increasing the net size of the cache.

- Conflict Miss – Happens in the case of direct-mapped cache as memory block placement is limited to a particular cache line; if that cache block is not empty the conflict arises. This is called conflict miss. N-way Set Associative Cache memory solves this problem.

Cache Performance

Cache Performance is calculated using the average access time calculated as per the following formula.

Average access time in cache environment

ta = tc x h + tm(1 - h)

where tc - Access time of cache memory tm - Access time of main memory h - hit ratio of cache

Case Study:

In a cache memory implementation if cache miss rate is 6%, what is the cache hit rate? If access time of cache is 15ns and access time of main memory is is 40ns, what is the average access time achieved in this case?

Cache hit ratio = 100 - Miss Rate = 94 Average access time = (15 x 0.94) + (40 x 0.06) = 16.5ns If the cache hit rate comes down to 90%, then let us see the effect on access time. Average access time = (15 x 0.9) + (40 x 0.1) = 17.5 ns

From the above, it is obvious that increasing the cache hit benefits the performance. You may also realize that when the cache hit ratio is below 90%, the performance drastically reduces, almost nullifying the presence of cache.